Explainable AI in Complex Systems: The 2 in 1 Lego Collectable

Introduction: Building Blocks of Understanding - The Emergence of AI and the Imperative of Explainability

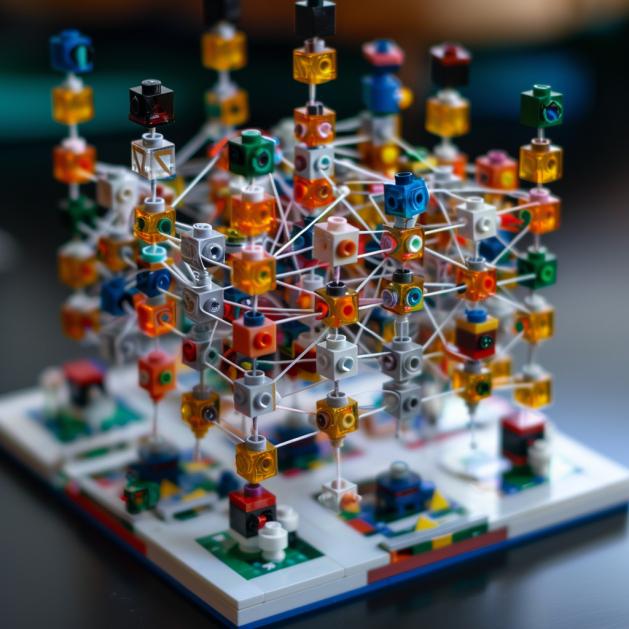

In the intricate world of technology, artificial intelligence (AI) stands as the cornerstone of innovation, powering complex systems from automated healthcare diagnostics to sophisticated financial models. As these AI-driven systems increasingly become the backbone of critical decision-making processes across industries, their complexity burgeons, often obscuring the rationale behind their conclusions. This opacity poses a significant challenge, much like a complex Lego set without an instruction manual, leaving users puzzled about how to piece together the myriad blocks of data and algorithms.

Enter Explainable AI (XAI), a concept as revolutionary as the advent of 2-in-1 Lego sets that not only offer the pleasure of building but also the flexibility to reconfigure and understand each component's role in the final masterpiece. XAI seeks to peel back the layers of AI's complexity, making the decision-making process transparent and interpretable. It aspires to transform AI systems from inscrutable black boxes into glass boxes, where every decision, no matter how complex, can be deconstructed, understood, and trusted by its users.

The importance of XAI cannot be overstated. In a landscape where AI's influence permeates every facet of our lives, the ability to explain and understand AI decisions is paramount. It ensures that AI systems remain accountable, ethical, and aligned with human values and regulations. Moreover, it democratizes AI, making it accessible not just to data scientists and engineers but to all stakeholders, regardless of their technical expertise.

This article aims to serve as a comprehensive guide to developing explainable AI in complex systems. It will explore how XAI can be systematically integrated into AI systems, enhancing their decision-making capabilities while ensuring their operations are transparent and interpretable. Through this exploration, we'll discover how XAI not only fortifies the trustworthiness and reliability of AI but also enriches our understanding of its intricate workings, much like the joy and insight gained from assembling and reconfiguring those beloved Legos.

Every majestic Lego set needs a manual : Focus on performance in AI often outweighs explainability

I. The Unboxing: Understanding Explainable AI

In the realm of modern technology, where artificial intelligence (AI) constructs systems as intricate and awe-inspiring as the Star Wars Millennium Falcon Lego set, Explainable AI (XAI) emerges as the critical instruction manual that allows us to comprehend and trust the marvels we've built. Like the detailed guides that enable Lego builders to understand each piece's purpose and place, XAI provides the blueprint for deciphering the decision-making process of AI, transforming it from inscrutable machinery into an open book.

Definition and Importance of Explainable AI

At its core, explainable AI is like the transparent bricks in a Lego set, allowing light to shine through and illuminate the inner workings. XAI demystifies AI by making its operations understandable to humans, shedding light on how data inputs are transformed into decisions. This clarity is not merely aesthetic; it serves practical and profound purposes.

Explainability is critical in AI systems for several reasons:

- Trust: Just as a Lego creator places each brick with confidence in its role, stakeholders must trust that an AI system's decisions are made on a sound basis. XAI builds this trust by revealing the 'why' behind decisions, ensuring users can rely on AI as a dependable tool rather than a mysterious black box.

- Regulatory Compliance: In many industries, particularly those with stringent safety and ethical standards, being able to explain decisions made by AI systems is not just beneficial but mandatory. Regulations such as the GDPR, whose provisions on automated decision-making are often described as a "right to explanation," are the equivalent of safety standards in toy manufacturing, ensuring products are not just effective but also safe and understandable.

- Debugging and Improvement: Understanding how an AI system arrives at its conclusions is crucial for diagnosing errors and areas for enhancement. Just as a misplaced Lego piece can be identified and corrected during the building process, XAI allows developers to pinpoint inaccuracies or biases in AI decision-making, paving the way for refinements.

The importance of XAI is clear. It ensures that AI, our most advanced technological 'toy', is not only capable of making complex decisions but also of being dissected and understood. This foundational understanding of XAI sets the stage for exploring how we can implement it in complex systems, ensuring that AI's power is matched by our ability to understand and trust its operation.

Simultaneous Builders : XAI expands trust and adoption of AI solutions

Challenges of Explainability in Complex AI Systems

The beauty and efficacy of AI systems often lie in their complexity. Yet, this complexity brings to the forefront the challenge of explainability, particularly in the realms of advanced AI models like deep learning.

- Complexity of Models: Deep learning networks, with their layers upon layers reminiscent of the countless interlocking bricks in a massive Lego castle, are notoriously difficult to interpret. Each neuron and layer might contribute in an opaque manner to the final outcome, much like how a single Lego piece's position might not reveal its purpose until the entire model is viewed. This intricacy is a double-edged sword; it allows for the handling of vast and nuanced datasets but obscures the reasoning behind specific decisions.

- Notation for approximating complexity: The complexity of a deep learning model can be hinted at by the number of parameters in the network. For a fully connected network with layer widths $n_0, n_1, \dots, n_L$, the count is $\sum_{l=1}^{L} (n_{l-1} + 1)\, n_l$, so it grows with every added layer and roughly quadratically with layer width. This growth showcases the model's capacity for nuanced decision-making but also its potential for obfuscation.

- Trade-offs Between Performance and Explainability: Achieving the delicate balance between a model's performance and its transparency is akin to choosing between a Lego model that is stunning in its complexity but difficult to understand, and one that is simpler, less intricate, but whose every piece has a clear purpose. High-performance AI models often operate through mechanisms that, while effective, do not lend themselves easily to human interpretation.
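To make the scale of the problem concrete, here is a minimal sketch that counts the trainable parameters of a plain fully connected network. The layer widths are hypothetical, and the formula (weights plus biases per layer) assumes dense layers only; convolutional or attention layers are counted differently.

```python
def dense_param_count(layer_widths):
    """Count weights and biases in a fully connected network.

    layer_widths is a hypothetical list such as [inputs, hidden..., outputs].
    A dense layer mapping n_in -> n_out has n_in * n_out weights plus n_out biases.
    """
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_widths, layer_widths[1:]))


small = dense_param_count([20, 16, 1])          # a tiny tabular model
large = dense_param_count([784, 512, 512, 10])  # a modest image classifier
print(small, large)
```

Even the modest second network has several hundred thousand parameters, none of which carries an obvious meaning on its own, which is the interpretability problem in miniature.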

The endeavor to illuminate the workings of AI, to break down the models into understandable segments without sacrificing their integrity or performance, mirrors the challenge of disassembling a complex Lego build without losing the essence of what makes it remarkable. As we delve deeper into the realms of AI, the quest for balance continues—seeking ways to keep the sophisticated architecture of our models while making each component, each decision, as transparent and comprehensible as a well-documented Lego instruction booklet.

II. Reading the Manual: The Fundamentals

Types of Explainability

- Model-specific vs. Model-agnostic Explanations: This distinction mirrors the contrast between following the specific instructions included with a Lego set and improvising creations with a box of assorted pieces. Model-specific explanations delve deep into the inner workings of a particular model, much like following a set's instruction booklet step by step. In contrast, model-agnostic approaches apply universally, like using general building principles to assemble pieces from any set into new forms. These techniques allow insights into AI behavior regardless of the underlying architecture, offering a toolkit for understanding that transcends the boundaries of individual models.

- Example: A model-specific explanation for a neural network might involve visualizing the activation of particular neurons when an image is processed. Meanwhile, a model-agnostic method like LIME (Local Interpretable Model-agnostic Explanations) generates insights by perturbing the input data and observing changes in output.

- Global vs. Local Explainability: The distinction between global and local explainability mirrors the difference between understanding the overarching design principles of a Lego masterpiece and grasping the purpose of each individual brick. Global explainability provides a comprehensive view of how a model makes decisions across all possible inputs. In contrast, local explainability focuses on specific instances of decision-making, examining why a model made a particular prediction for a single input.

- Mathematical Illustration: For a given model $f$, global explainability seeks to understand $f(x)$ over all inputs $x$, while local explainability targets $f(x_0)$ for a specific input $x_0$. This could be visualized through techniques such as SHAP (SHapley Additive exPlanations), which assign each feature an importance value for a particular prediction.
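The global versus local distinction is easiest to see on a linear model, where both views can be read off directly. The sketch below uses a hypothetical three-feature scoring model; the feature names and weights are invented for illustration, and using absolute weights as global importance assumes the features are on comparable scales.

```python
import numpy as np

# Hypothetical linear scoring model f(x) = w . x + b with three named features.
feature_names = ["income", "debt", "age"]
w = np.array([0.8, -1.5, 0.1])
b = 0.2

def f(x):
    return float(w @ x + b)

# Global view: the weights describe how f responds to each feature for every input.
global_importance = dict(zip(feature_names, np.abs(w)))

# Local view: the contribution of each feature to one specific prediction f(x0).
x0 = np.array([1.0, 2.0, 3.0])
local_contrib = dict(zip(feature_names, w * x0))

print("global:", global_importance)
print("local :", local_contrib)
print("contributions + b =", sum(local_contrib.values()) + b, " f(x0) =", f(x0))
```

For non-linear models the two views no longer coincide so neatly, which is why dedicated local methods such as LIME and SHAP exist.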

In navigating the landscape of explainable AI, these fundamental concepts serve as both the bricks and the blueprints, the individual pieces and the overarching design principles. They allow us to assemble AI systems that are not only powerful and effective but also transparent and interpretable, ensuring that the advanced technologies we develop are as comprehensible as a beloved Lego set, with all the joy of discovery that entails. This approach not only fosters trust and accessibility but also opens the door to a deeper collaboration between humans and machines, paving the way for advancements that are both innovative and inclusive.

Key Techniques and Tools

- LIME (Local Interpretable Model-agnostic Explanations): LIME dissects the prediction of any machine learning model by creating an interpretable model around the prediction. It operates by generating perturbations around a chosen instance, predicting outcomes for these variations using the original model, and weighting these perturbed samples by their similarity to the original instance. An interpretable model is then trained on this new dataset, with the outcome providing insights into the specific prediction. LIME's strength lies in its model-agnostic capability, allowing it to deliver local explanations for any model's decisions, thereby enhancing transparency and interpretability by indicating which features most influenced a particular prediction.

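```python
# Example of using LIME with a classification model
# Assumes X_train and X_test are pandas DataFrames and `model` is a fitted
# classifier that exposes predict_proba.
from lime import lime_tabular

explainer = lime_tabular.LimeTabularExplainer(
    training_data=X_train.values,
    feature_names=X_train.columns.tolist(),
    class_names=['Negative', 'Positive'],
    mode='classification',
)
# Explain the first test row by fitting a local surrogate around it.
exp = explainer.explain_instance(data_row=X_test.values[0], predict_fn=model.predict_proba)
exp.show_in_notebook(show_table=True)
```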
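The perturb, predict, weight, and fit steps described for LIME can also be sketched without the library. This is an illustrative simplification rather than the lime package's exact algorithm: the Gaussian perturbation, the kernel, and the `black_box` function are all assumptions made for the example.

```python
import numpy as np

def lime_sketch(predict_fn, x, n_samples=2000, scale=0.5, kernel_width=1.0, seed=0):
    """Fit a local linear surrogate around x and return its feature weights."""
    rng = np.random.default_rng(seed)
    # 1. Perturb the instance with Gaussian noise.
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    # 2. Query the original model on the perturbed points.
    y = predict_fn(Z)
    # 3. Weight samples by proximity to x (exponential kernel on Euclidean distance).
    d = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(d ** 2) / kernel_width ** 2)
    # 4. Fit a weighted linear model; its coefficients are the local explanation.
    A = np.hstack([Z - x, np.ones((n_samples, 1))])
    sw = np.sqrt(w)[:, None]
    coef, *_ = np.linalg.lstsq(A * sw, y * sw[:, 0], rcond=None)
    return coef[:-1]  # drop the intercept

# Hypothetical black box: depends strongly on feature 0, weakly on feature 1, not on feature 2.
def black_box(X):
    return 1.0 / (1.0 + np.exp(-(3.0 * X[:, 0] - 0.5 * X[:, 1])))

weights = lime_sketch(black_box, np.array([0.1, 0.2, 0.3]))
print(weights)
```

The surrogate's weights recover the ordering of the features' local influence, which is the kind of answer LIME reports for a single prediction.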

- SHAP (SHapley Additive exPlanations): SHAP draws on cooperative game theory to attribute a model's output to its input features, dividing the prediction among the features like a fair division of credit. For a chosen instance, it considers coalitions (subsets) of features and measures how the prediction changes when a given feature is added to each coalition. A feature's Shapley value is the weighted average of these marginal contributions across all subsets, reflecting its impact on that prediction. Because the number of subsets grows exponentially with the number of features, practical implementations rely on sampling (KernelExplainer) or on model-specific shortcuts (TreeExplainer for tree ensembles). The framework is model-agnostic in principle, giving local explanations for individual predictions and global insights when the values are aggregated across a dataset.

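```python
# Example of using SHAP with a regression model
# Assumes `model` is a fitted tree-based regressor (for example a random forest
# or gradient-boosted trees) and X_train is a pandas DataFrame.
import shap

explainer = shap.TreeExplainer(model)  # fast explainer specific to tree models
shap_values = explainer.shap_values(X_train)
# Bar plot of the mean absolute SHAP value per feature (a global summary).
shap.summary_plot(shap_values, X_train, plot_type="bar")
```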
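For a handful of features, Shapley values can be computed exactly by enumerating every subset, which makes the definition tangible. The sketch below is not how the shap library works internally; the toy `model` function and the choice of replacing absent features with a baseline value are assumptions for illustration.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, x, baseline):
    """Exact Shapley values by enumerating all feature subsets (only feasible for few features).

    Features outside a coalition are set to their baseline value, which is one
    common way, though not the only one, to "remove" a feature.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                without_i = [x[j] if j in subset else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                phi[i] += weight * (value_fn(with_i) - value_fn(without_i))
    return phi

# Hypothetical model with an interaction between features 0 and 1.
def model(v):
    return 2.0 * v[0] + v[0] * v[1] + 0.5 * v[2]

x, baseline = [1.0, 2.0, 4.0], [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi, "sum:", sum(phi), "f(x) - f(baseline):", model(x) - model(baseline))
```

The values add up to the difference between the prediction and the baseline prediction, and the interaction term is split evenly between the two features involved, which is the "fair division of credit" property in action.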

Piece Glows in the Dark : Model-agnostic techniques allow for explainability independent of model type

Introduction to Tools and Libraries

- Alibi: Alibi is an open-source Python library of algorithms and tools for XAI. It supports feature importance explanations with SHAP values, provides human-readable rules with Anchor explanations, and generates counterfactual instances to illustrate how input changes affect predictions. Drift and outlier detection for monitoring models over time are handled by its companion library, Alibi Detect. Many of its explainers are model-agnostic and work with popular machine learning frameworks, while others need access to the model's internals, and the library includes some plotting utilities for communicating results. Alibi helps practitioners build transparent, fair, and reliable AI systems by embedding explainability into machine learning workflows.

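```python
# Example of using Alibi to generate counterfactual explanations
# Assumes `model` is a trained TensorFlow/Keras classifier (or a predict function)
# and X_train, X_test are NumPy arrays.
from alibi.explainers import CounterfactualProto  # spelled CounterFactualProto in older releases

cf = CounterfactualProto(model, shape=(1,) + X_train.shape[1:], use_kdtree=True)
cf.fit(X_train)  # builds the k-d trees used as class prototypes
explanation = cf.explain(X_test[0].reshape(1, -1))
```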
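The idea behind a counterfactual, the smallest input change that flips the decision, has a closed form when the model is linear. The sketch below illustrates that idea only; it is not Alibi's prototype-guided search, and the credit-style weights and features are hypothetical.

```python
import numpy as np

def linear_counterfactual(x, w, b, margin=1e-3):
    """Smallest L2 change to x that flips the sign of the linear score w . x + b."""
    score = w @ x + b
    # Project onto the decision boundary, then step slightly past it.
    step = (score + np.sign(score) * margin) / (w @ w)
    return x - step * w

# Hypothetical credit model: a positive score means "approve".
w = np.array([0.6, -1.2])   # weights for [income, debt]
b = -0.5
x = np.array([1.0, 0.8])    # score = 0.6 - 0.96 - 0.5 = -0.86, so rejected
x_cf = linear_counterfactual(x, w, b)

print("original score      :", w @ x + b)
print("counterfactual input:", x_cf)
print("counterfactual score:", w @ x_cf + b)
```

Reading the difference between `x` and `x_cf` gives the explanation: how much income would need to rise and debt to fall for the decision to change. Real counterfactual methods add constraints so the suggested changes stay plausible.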

- ELI5: ELI5 is a Python package that helps to debug machine learning classifiers and explain their predictions. Its name, "ELI5," stands for "Explain Like I'm 5," reflecting its goal to make model explanations accessible and understandable. ELI5 supports a variety of machine learning frameworks, including scikit-learn, XGBoost, and Keras, among others. It offers functionalities such as displaying weights of features in linear models and decision trees, visualizing image explanations for image classifiers, and providing text explanations for text-based models. Additionally, ELI5 facilitates the implementation of permutation importance, a technique for assessing feature importance by measuring the increase in model prediction error after permuting the feature's values. Through its straightforward and intuitive interface, ELI5 aids in interpreting model behavior, identifying feature importances, and diagnosing model performance issues, making it a valuable tool for machine learning practitioners seeking to enhance model transparency and accountability.

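```python
# Example of using ELI5 to inspect the weights of a linear classifier
# Assumes X_train is a pandas DataFrame and y_train holds the labels.
import eli5
from sklearn.linear_model import LogisticRegression

model = LogisticRegression()
model.fit(X_train, y_train)
# Renders an HTML table of feature weights when run in a notebook.
eli5.show_weights(model, feature_names=X_train.columns.tolist())
```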
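Permutation importance, mentioned above, is simple enough to sketch directly: shuffle one column at a time and measure how much the error grows. The version below is a minimal NumPy illustration, not ELI5's implementation; the synthetic data and the `predict` function standing in for a fitted model are assumptions.

```python
import numpy as np

def permutation_importance(predict_fn, X, y, n_repeats=10, seed=0):
    """Mean increase in mean squared error after shuffling each column of X."""
    rng = np.random.default_rng(seed)
    base_error = np.mean((predict_fn(X) - y) ** 2)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        increases = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            X_perm[:, j] = rng.permutation(X_perm[:, j])  # break the feature-target link
            increases.append(np.mean((predict_fn(X_perm) - y) ** 2) - base_error)
        importances[j] = np.mean(increases)
    return importances

# Hypothetical data: the target depends on feature 0 strongly, feature 1 weakly, feature 2 not at all.
rng = np.random.default_rng(42)
X = rng.normal(size=(500, 3))
y = 4.0 * X[:, 0] + 0.5 * X[:, 1]
predict = lambda A: 4.0 * A[:, 0] + 0.5 * A[:, 1]  # stands in for a fitted model

imp = permutation_importance(predict, X, y)
print(imp)
```

A feature the model ignores gets an importance of zero, because shuffling it cannot change any prediction.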

Through the application of these techniques and tools, the development of explainable AI becomes an engaging process of assembly and discovery. Each technique adds clarity and understanding, while the tools available make the process accessible and practical, allowing for the creation of AI systems that not only make decisions but also reveal the logic behind them in a manner as comprehensible and modular as a sprawling Lego masterpiece.

III. Assembly Strategy: Practical Steps

Designing with Explainability in Mind

The cornerstone of creating an explainable AI system lies in the initial blueprint—it's about laying the groundwork with clarity and transparency as core objectives.

Flip Build : Planning for explainability first mitigates future risks of black box solutions

- Best Practices for Building Explainable Models:

- Begin with the End in Mind: Just as a Lego builder envisions the completed project, start by defining the explainability goals and how they align with the system's objectives. Determine whether global or local explainability is required and the stakeholders' needs in understanding AI decisions.

- Simplicity is Key: Opt for simpler models when feasible, as they naturally lend themselves to easier interpretation. While complex models like deep learning have their place, simpler models often provide sufficient accuracy with the added benefit of being more transparent.

- Feature Selection and Engineering with Care: Choose and engineer features in a way that they convey meaningful insights. Each feature should have a clear reason for inclusion, enhancing the model's explainability.

- Incorporating Explainability in the Model Design Phase:

- Select Appropriate Algorithms: From the onset, prefer algorithms known for their interpretability. Decision trees, linear models, and rule-based models are the Lego bricks that are easy to track and understand, making them ideal choices for explainable projects.

- Use Model-Agnostic Methods: Tools like LIME and SHAP offer versatility by providing insights into any model's decision-making process. Implementing these tools during the design phase prepares the AI system for future analysis.

- Plan for Post-hoc Analysis: Design models with the understanding that further analysis will be necessary. This involves keeping detailed logs of model inputs, decisions, and changes over time, analogous to keeping a detailed instruction booklet for a complex Lego kit, enabling users to backtrack and understand each step.
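Planning for post-hoc analysis mostly means recording enough context to replay a decision later. One possible shape for such a log, using only the standard library, is sketched below; the record fields, the JSON-lines format, and the file name are all illustrative assumptions rather than a prescribed schema.

```python
import json
import os
import tempfile
import time
from dataclasses import dataclass, asdict, field

@dataclass
class PredictionRecord:
    """One logged decision; every field name here is illustrative."""
    model_version: str
    features: dict
    prediction: float
    explanation: dict  # e.g. per-feature contributions from LIME or SHAP
    timestamp: float = field(default_factory=time.time)

def log_prediction(record, path):
    # Append one JSON object per line so the log can be replayed later.
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(asdict(record)) + "\n")

def load_log(path):
    with open(path, encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]

# Usage with hypothetical values, written to a temporary directory.
log_path = os.path.join(tempfile.mkdtemp(), "predictions.jsonl")
log_prediction(
    PredictionRecord("v1.0", {"income": 52000, "debt": 8000}, 0.73,
                     {"income": 0.4, "debt": -0.1}),
    log_path,
)
records = load_log(log_path)
print(records[0]["model_version"], records[0]["prediction"])
```

Storing the model version alongside the inputs and the explanation is what makes it possible to backtrack through a decision after the model has been retrained.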

In implementing these practices, the goal is to construct AI systems where the decision-making process is as accessible and comprehensible as a well-crafted Lego set, with each component's role and contribution clearly defined and understood. This approach not only fosters trust and adoption among users but also ensures compliance with evolving regulatory standards, securing a place for AI as both a powerful and interpretable tool in complex system applications.

Integrating Explainability into Existing Complex Systems

In the grand scheme of developing explainable AI, retrofitting existing complex systems with interpretability is like turning a Hogwarts Castle Lego set into LOTR's Barad-dûr. It requires careful deconstruction, thoughtful modification, and meticulous reconstruction to ensure the original functionality remains intact while adding the newfound clarity and transparency.

- Strategies for adding explainability to pre-existing AI models:

- Post-hoc Explanation Techniques: Techniques such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) can provide insights into the decision-making process of black-box models without requiring fundamental changes to the model architecture. These methods analyze model predictions on specific instances and generate explanations based on feature importance or contribution.

- Proxy Model Approach: Similar to building a complementary Lego set that fits seamlessly with the existing structure, creating a proxy model can offer a simplified version of the complex system, providing a more interpretable alternative. This proxy model, often a simpler and more transparent model like decision trees or logistic regression, mirrors the behavior of the original model while being easier to understand and interpret.
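The proxy model approach can be sketched in a few lines: train a simple model on the black box's own predictions, then check how faithfully it mimics them. The example below uses a linear surrogate and a hypothetical `black_box` function; a shallow decision tree would be an equally reasonable choice of proxy.

```python
import numpy as np

def fit_linear_surrogate(black_box, X):
    """Fit a linear proxy to the black box's predictions and report its fidelity (R^2)."""
    y_bb = black_box(X)
    A = np.hstack([X, np.ones((X.shape[0], 1))])  # add an intercept column
    coef, *_ = np.linalg.lstsq(A, y_bb, rcond=None)
    y_proxy = A @ coef
    ss_res = np.sum((y_bb - y_proxy) ** 2)
    ss_tot = np.sum((y_bb - y_bb.mean()) ** 2)
    return coef, 1.0 - ss_res / ss_tot

# Hypothetical black box: mostly linear with a mild nonlinearity.
def black_box(X):
    return 2.0 * X[:, 0] - 1.0 * X[:, 1] + 0.3 * np.sin(X[:, 0] * X[:, 1])

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 2))
coef, fidelity = fit_linear_surrogate(black_box, X)
print("surrogate weights:", coef[:-1], "intercept:", coef[-1])
print("fidelity R^2:", round(fidelity, 3))
```

The fidelity score matters as much as the weights: a proxy that tracks the original poorly explains only itself, so a low value is a signal to try a richer surrogate or a local method instead.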

Piece not in Bag : Using proxy models can address explainability post build

- Case studies/examples of successful integrations:

- Financial Sector: In the banking industry, where complex machine learning models are used for credit scoring, integrating explainability techniques has become crucial for regulatory compliance and customer trust. By incorporating post-hoc explanation methods like LIME, banks can provide customers with transparent insights into credit decisions, ensuring fairness and accountability.

- Healthcare: Healthcare systems rely heavily on AI for diagnosis and treatment recommendations. However, opaque models pose challenges in understanding and trusting AI-driven decisions. By retrofitting existing models with proxy models or leveraging techniques like SHAP, healthcare providers can offer interpretable explanations for medical predictions, facilitating collaboration between clinicians and AI systems.

In essence, integrating explainability into pre-existing AI systems involves a delicate balance between preserving functionality and enhancing interpretability. Much like transforming a Lego model into a 2-in-1 collectible, these strategies aim to enhance the utility and transparency of complex systems, empowering users to understand and trust AI-driven decisions in various domains.

IV. Stray Pieces & Saving Spares: Ethical Considerations and Future Directions

Ethical Implications of Explainable AI

In the realm of AI development, achieving transparency and interpretability is not just a technical challenge but also an ethical imperative.

- Potential risks and considerations:

- Bias and Fairness: Without transparency, AI models may inadvertently perpetuate biases or discrimination. Hidden biases in AI algorithms can lead to unfair treatment or discriminatory outcomes. Therefore, ensuring transparency in AI systems is essential for identifying and mitigating bias, promoting fairness, and upholding ethical standards.

- Trust and Accountability: Lack of transparency can erode trust in AI systems, hindering their acceptance and adoption. By fostering transparency and accountability, organizations can build trust among users and stakeholders, enhancing the ethical integrity of AI deployment.
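One simple way to start looking for hidden bias is to compare how often a model produces a positive outcome for different groups. The sketch below computes that gap on invented data; the predictions, group labels, and the choice of this particular check are assumptions, and a real fairness audit would consider several metrics and the context of the decision.

```python
def positive_rate_by_group(predictions, groups):
    """Share of positive predictions per group (a demographic-parity style check)."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    return {g: positives[g] / totals[g] for g in totals}

# Hypothetical model outputs (1 = approved) and a hypothetical protected attribute.
preds = [1, 0, 1, 1, 0, 1, 0, 0, 0, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = positive_rate_by_group(preds, groups)
gap = max(rates.values()) - min(rates.values())
print(rates, "gap:", gap)
```

A gap on its own does not prove unfair treatment, but it points to where explanation techniques should be applied to find out which features drive the difference.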

Projectile Warning : Models learn from the past and the past isn't perfect

In summary, the ethical implications of explainable AI underscore the importance of transparency and interpretability in AI development. By embracing transparency as a guiding principle and addressing potential risks and considerations, developers and organizations can foster trust, fairness, and accountability in AI systems, ensuring their ethical and responsible use in complex domains.

The Future of Explainable AI

As the development of explainable AI (XAI) continues to evolve, it offers promising opportunities to revolutionize decision-making processes across various industries.

Emerging Trends and Research in XAI

- Deep Learning Interpretability: Researchers are making strides in developing methods to interpret the decisions of deep learning models, which are traditionally considered black-box models. Techniques like Layer-wise Relevance Propagation (LRP), Integrated Gradients, and Attention mechanisms are being explored to understand the inner workings of neural networks.

- Model-specific Interpretability: There's a growing focus on developing interpretability techniques tailored to specific types of models, such as deep neural networks, ensemble methods, and reinforcement learning models. These techniques aim to provide insights into the decision-making process of these models, considering their unique architectures and characteristics.

- Causal Inference: Causal inference methods are gaining traction in XAI research, allowing practitioners to uncover causal relationships between input features and model predictions. By distinguishing between correlation and causation, these methods enable more reliable and actionable insights into the factors influencing model outputs.

- Human-AI Collaboration: The future of XAI lies in fostering collaboration between humans and AI systems, leveraging the strengths of both to improve decision-making outcomes. Human-in-the-loop systems, where humans and AI work together iteratively, enable users to provide feedback, ask questions, and refine the AI's explanations, leading to more interpretable and trustworthy models. This collaborative approach ensures that AI systems complement human expertise rather than replace it.
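Of the techniques listed above, Integrated Gradients is compact enough to sketch: it averages the model's gradients along a straight path from a baseline input to the actual input and scales them by the input difference. The version below uses numerical gradients and a toy function `f` as stand-ins; in practice the gradients would come from a deep learning framework's automatic differentiation.

```python
import numpy as np

def integrated_gradients(f, x, baseline, steps=200, eps=1e-5):
    """Approximate Integrated Gradients with a midpoint Riemann sum and numerical gradients."""
    x, baseline = np.asarray(x, float), np.asarray(baseline, float)
    total_grad = np.zeros_like(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = baseline + alpha * (x - baseline)
        # Central-difference gradient of f at this point on the straight-line path.
        grad = np.zeros_like(x)
        for i in range(x.size):
            e = np.zeros_like(x)
            e[i] = eps
            grad[i] = (f(point + e) - f(point - e)) / (2 * eps)
        total_grad += grad
    return (x - baseline) * total_grad / steps

# Hypothetical smooth model standing in for a neural network.
def f(v):
    return float(np.tanh(v[0] + 2.0 * v[1]) + 0.5 * v[2] ** 2)

x, baseline = np.array([0.5, 0.25, 1.0]), np.zeros(3)
attr = integrated_gradients(f, x, baseline)
print(attr, "sum:", attr.sum(), "f(x) - f(baseline):", f(x) - f(baseline))
```

The attributions sum (up to numerical error) to the difference between the model's output at the input and at the baseline, a property often called completeness, which makes the result easy to sanity-check.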

To Infinity and Beyond! — Buzz Lightyear

Potential Impact on Various Industries

- Healthcare: In healthcare, XAI holds the potential to revolutionize diagnostic processes, treatment planning, and patient care. Interpretable AI models can provide clinicians with transparent explanations for medical predictions, facilitating informed decision-making and improving patient outcomes. By enhancing the interpretability of AI-driven diagnostics, XAI can also mitigate concerns regarding trust and liability in medical settings.

- Finance: In the finance sector, explainable AI plays a crucial role in risk assessment, fraud detection, and investment strategies. Transparent AI models provide financial analysts and regulators with insights into the factors influencing financial decisions, enabling them to identify and mitigate risks effectively. Additionally, interpretable AI algorithms enhance regulatory compliance and accountability, ensuring fair and ethical practices in the financial industry.

- Autonomous Vehicles: In the realm of autonomous vehicles, XAI is essential for enhancing safety, reliability, and user trust. Transparent AI systems provide drivers and passengers with understandable explanations for the vehicle's decisions and actions, reducing uncertainty and improving overall safety. By elucidating the decision-making process of autonomous vehicles, XAI fosters user acceptance and confidence in autonomous driving technology.

In conclusion, the future of XAI holds tremendous promise for transforming decision-making processes across diverse industries. By embracing emerging trends and research in XAI and harnessing its potential impact on various sectors, organizations can unlock new opportunities for innovation, transparency, and trust in AI-driven systems.

V. The Aftermath

In this article, we have delved into the development of explainable AI (XAI) in complex systems, drawing parallels to the versatile nature of collectible toys like Legos. Just as Legos offer endless possibilities for creation and deconstruction, XAI not only enhances decision-making processes but also enables users to understand and interpret AI systems.

We began by exploring the significance of explainability in AI, emphasizing its role in fostering trust, transparency, and accountability. Through a nuanced discussion of key concepts, challenges, and practical implementations, we highlighted the transformative potential of XAI across various industries.

From the emergence of interpretable models to the adoption of model-agnostic methods, XAI continues to evolve, promising to revolutionize decision-making processes in healthcare, finance, autonomous vehicles, and beyond. By prioritizing explainability, organizations can unlock new opportunities for innovation, fairness, and ethical AI practices.

As we look to the future, the importance of developing explainable AI systems remains paramount. By embracing ongoing research, fostering collaboration between humans and machines, and prioritizing transparency, AI practitioners can pave the way for a more trustworthy and accountable AI landscape.

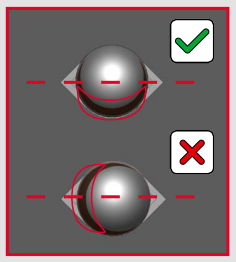

Correct Orientation : Ensuring fairness in AI has never been more important

In conclusion, let us reaffirm our commitment to advancing XAI, recognizing it as not only a tool for improving decision-making but also a cornerstone of responsible AI development. Together, let us prioritize explainability and work towards a future where AI systems are not only powerful but also understandable and interpretable by design.